How to Efficiently Scrape Wikipedia Data: A Complete Guide

Wikipedia is one of the largest and most popular online encyclopedias in the world, offering a wealth of information and content. Many developers and researchers need to scrape data from Wikipedia for analysis or to store it in their own databases. If you have similar needs, this article explains how to scrape Wikipedia and covers the common methods and best practices.

What is Wikipedia Scraping?

Wikipedia scraping refers to the process of extracting content from Wikipedia using programming techniques. This typically involves "web scraping" technology to extract text, images, links, and other useful data from web pages. You can use various tools and libraries to automate the scraping process and store Wikipedia data locally for later analysis and use.

Why Scrape Wikipedia?

There are many uses for scraping Wikipedia content, especially in fields like data analysis, natural language processing, and machine learning. Here are some common use cases:

Academic Research: Many researchers scrape Wikipedia to analyze the knowledge structure of different topics, track changes to articles, and study editing activity.

Building Databases: Some developers might want to store Wikipedia content in their own databases for local querying or to combine it with other data for analysis.

Automation Tools: Some automation tools or applications need to regularly scrape up-to-date information from Wikipedia and present it in a structured manner.

There are several ways to scrape Wikipedia, and here are some common techniques and tools:

Using Wikipedia Dumps

Wikimedia publishes large, regular Wikipedia dumps that contain the content of every page, including the article text (as wikitext), links to images, and, in the full-history versions, all historical revisions. You can download these dumps directly and import them into your local database, without having to scrape the website every time.

Steps to Download:

Visit the Wikimedia dumps site (https://dumps.wikimedia.org).

Choose the language version and the data type you want (usually XML format).

Download the file and parse the content as needed.

This method is ideal for users who need a lot of static data, but it’s not suitable if you need to retrieve real-time updates.
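The "parse the content" step can be done without loading a multi-gigabyte file into memory. The sketch below streams pages with the standard library's xml.etree.ElementTree.iterparse. It assumes a pages-articles style export (one revision per page), and the helper names iter_pages and iter_dump are illustrative, not part of any library.

```python
import bz2
import xml.etree.ElementTree as ET
from typing import IO, Iterator, Optional, Tuple

# Illustrative sketch: helper names are our own, not a library API.


def _local_name(tag: str) -> str:
    """Drop the '{namespace}' prefix ElementTree adds (the export schema version varies)."""
    return tag.rsplit("}", 1)[-1]


def iter_pages(source: IO[bytes]) -> Iterator[Tuple[str, str]]:
    """Stream (title, wikitext) pairs from a MediaWiki XML export, one <page> at a time."""
    title: Optional[str] = None
    text = ""
    for _event, elem in ET.iterparse(source, events=("end",)):
        name = _local_name(elem.tag)
        if name == "title":
            title = elem.text or ""
        elif name == "text":
            # In full-history dumps this keeps only the last <text> seen in the page.
            text = elem.text or ""
        elif name == "page":
            yield title or "", text
            title, text = None, ""
            elem.clear()  # free the finished page's children and text


def iter_dump(path: str) -> Iterator[Tuple[str, str]]:
    """Open a compressed *.xml.bz2 dump and stream its pages, decompressing on the fly."""
    with bz2.open(path, "rb") as f:
        yield from iter_pages(f)
```

For example, looping over iter_dump("enwiki-latest-pages-articles.xml.bz2") would walk every article in an English Wikipedia dump (check the exact name of the file you downloaded). The text is raw wikitext, with templates and [[...]] link markup, so you will usually need a further cleaning step.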

Using the API to Scrape


Wikipedia offers a robust and free API that allows developers to scrape or interact with Wikipedia’s content in a structured and efficient way. Unlike web scraping, which requires parsing HTML from web pages, the Wikipedia API provides structured data in formats like JSON or XML, making it much easier for developers to work with. This method is particularly useful when you need to fetch specific page content, historical versions, links, categories, or even related metadata, all while avoiding the need to download large amounts of raw HTML.

The Wikipedia API is a great choice for applications or projects that need regularly updated data from Wikipedia without putting unnecessary load on Wikipedia's servers. It provides direct access to Wikipedia's vast database, and because the data is already structured, you can spend more time analyzing it rather than cleaning it.

Basic Usage:

To get started with the Wikipedia API, you don't need to install any special libraries or tools: everything is done through plain HTTP requests. If you're using Python, the general-purpose requests library makes those calls convenient, and Pywikibot offers a higher-level framework built specifically for MediaWiki sites.

1. Understand the API Structure

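The Wikipedia API (the MediaWiki Action API) is served from a single endpoint, https://en.wikipedia.org/w/api.php for English Wikipedia, and the action parameter selects which module handles a request. For example, to get the content of a specific page you would use action=query with prop=revisions. The same combination also returns historical versions of an article (controlled by parameters such as rvlimit); there is no separate action=revisions module. Other modules include action=parse, which returns a page rendered as HTML.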
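To make the single-endpoint design concrete, here is a small sketch that builds request URLs for the modules mentioned above. It assumes English Wikipedia's endpoint and uses only urllib.parse from the standard library; api_url is an illustrative helper name, not a library function.

```python
import urllib.parse

# Assumption: English Wikipedia's Action API endpoint.
API_URL = "https://en.wikipedia.org/w/api.php"


def api_url(action: str, **params: str) -> str:
    """Illustrative helper: `action` picks the module, the other keywords are its parameters."""
    query = {"action": action, "format": "json", **params}
    return API_URL + "?" + urllib.parse.urlencode(query)


# Latest wikitext of a page: the query module with prop=revisions.
content_url = api_url("query", titles="Python (programming language)",
                      prop="revisions", rvprop="content", rvslots="main")

# The five most recent revisions (IDs, timestamps, editors): same module, different rv* parameters.
history_url = api_url("query", titles="Python (programming language)",
                      prop="revisions", rvprop="ids|timestamp|user", rvlimit="5")

# The page rendered as HTML: the parse module.
html_url = api_url("parse", page="Python (programming language)", prop="text")
```

Only the query string changes between these requests; the base URL stays the same.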

The basic structure of a Wikipedia API request looks like this:

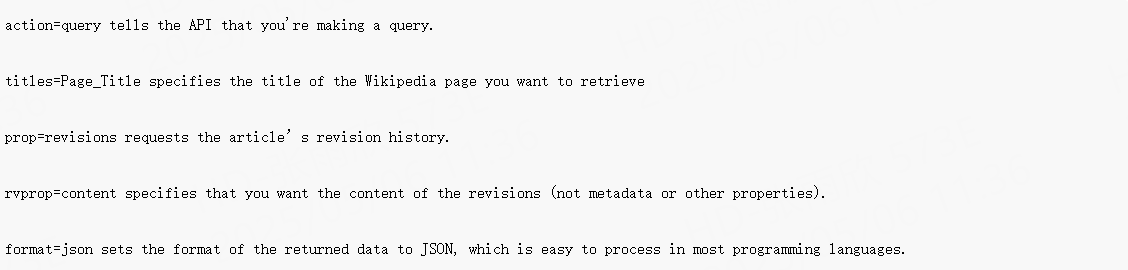

In this example:

2. Make an API Request

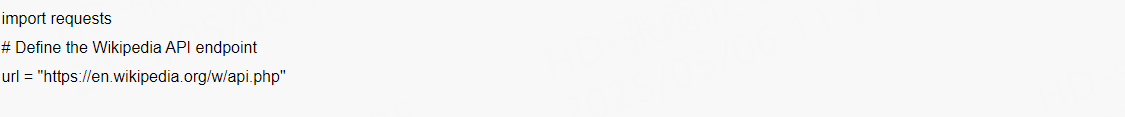

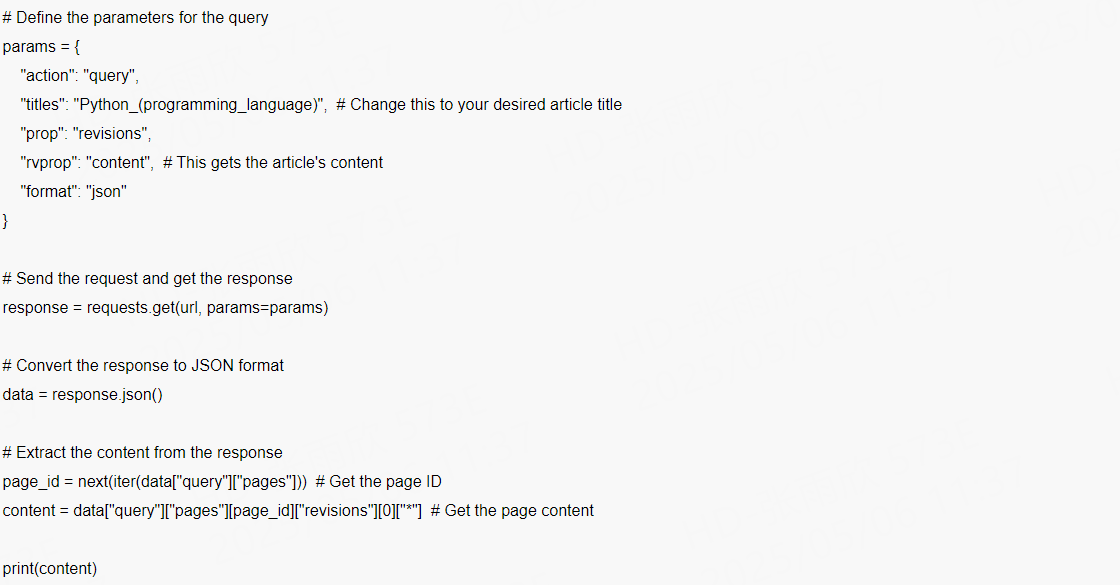

To retrieve content using the API, you can send a simple GET request to the above URL. Here’s an example in Python:

This code sends a GET request to the Wikipedia API and retrieves the content of the page titled "Python (programming language)". It then extracts and prints the content from the API response.
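A self-contained sketch of such a request is shown below. It uses only the standard library (urllib) rather than requests, assumes English Wikipedia, and asks for the newer response layout with rvslots=main and formatversion=2, so the text sits at revisions[0]["slots"]["main"]["content"] instead of under the legacy "*" key. The User-Agent string is a placeholder to replace with your own details, and the function names are illustrative.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict

API_URL = "https://en.wikipedia.org/w/api.php"  # assumption: English Wikipedia
# Placeholder: identify your tool and how to reach you.
USER_AGENT = "ExampleWikiScraper/0.1 (you@example.org)"


def build_content_request(title: str) -> urllib.request.Request:
    """Illustrative helper: build a GET request for the latest wikitext of one page."""
    params = {
        "action": "query",
        "titles": title,
        "prop": "revisions",
        "rvprop": "content",
        "rvslots": "main",       # ask for the main content slot
        "format": "json",
        "formatversion": "2",    # pages come back as a list, not an ID-keyed object
    }
    url = API_URL + "?" + urllib.parse.urlencode(params)
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


def extract_wikitext(data: Dict[str, Any]) -> str:
    """Pull the wikitext out of a formatversion=2 response for a single page."""
    page = data["query"]["pages"][0]
    if page.get("missing"):
        raise KeyError(f"page not found: {page.get('title')}")
    return page["revisions"][0]["slots"]["main"]["content"]


def fetch_wikitext(title: str) -> str:
    """Send the request and return the page's wikitext."""
    with urllib.request.urlopen(build_content_request(title), timeout=30) as resp:
        return extract_wikitext(json.load(resp))
```

Calling print(fetch_wikitext("Python (programming language)")[:500]) prints the beginning of the article's wikitext. Keeping request building and response parsing in separate functions makes both easy to test without a network connection.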

3. Parse the Data

The API will return the data in a structured format like JSON or XML. JSON is generally preferred because it’s easy to work with in most modern programming languages. For instance, the response from the above query would look something like this:

```json
{
  "query": {
    "pages": {
      "23862": {
        "revisions": [
          {
            "*": "Python is an interpreted, high-level programming language..."
          }
        ]
      }
    }
  }
}
```

You can then easily access the article's content, history, categories, or other relevant information from this structured response.
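Because the response layout depends on the formatversion and rvslots parameters, code that reads it can be written defensively. The sketch below is an illustrative helper (page_texts is our own name) that accepts both the legacy layout, where pages are keyed by page ID as in the sample above, and the formatversion=2 layout, where pages is a list. Missing pages map to None.

```python
from typing import Any, Dict, Iterable, Optional, Tuple

# Illustrative sketch: helper names are our own, not a library API.


def _revision_text(revision: Dict[str, Any]) -> Optional[str]:
    """Return wikitext from one revision, in either the legacy or the slot-based layout."""
    if "slots" in revision:  # present when rvslots=main was requested
        revision = revision["slots"]["main"]
    # formatversion=2 names the field "content"; the legacy formatversion=1 uses "*"
    return revision.get("content", revision.get("*"))


def page_texts(data: Dict[str, Any]) -> Dict[str, Optional[str]]:
    """Map each page (by title, falling back to page ID) to its wikitext; None if missing."""
    pages = data["query"]["pages"]
    items: Iterable[Tuple[str, Dict[str, Any]]]
    if isinstance(pages, dict):  # formatversion=1: an object keyed by page ID
        items = pages.items()
    else:  # formatversion=2: a plain list of page objects
        items = ((str(page.get("pageid", "")), page) for page in pages)
    result: Dict[str, Optional[str]] = {}
    for page_id, page in items:
        revisions = page.get("revisions")
        result[page.get("title", page_id)] = _revision_text(revisions[0]) if revisions else None
    return result
```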

4. Handling Multiple Requests

If you're working with a large number of pages, you will need to make many API requests. To reduce the number of calls, Wikipedia's API lets you request content from several pages in a single query by passing their titles separated by | (up to 50 titles per request for most clients). Here's an example of how you might request multiple pages in one API call:

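```python
import requests

url = "https://en.wikipedia.org/w/api.php"
# Identify your client, as Wikimedia's User-Agent policy asks (placeholder value)
headers = {"User-Agent": "MyWikiScraper/0.1 (you@example.com)"}

params = {
    "action": "query",
    "titles": "Python_(programming_language)|JavaScript",  # Multiple titles separated by |
    "prop": "revisions",
    "rvprop": "content",
    "format": "json",
}

response = requests.get(url, params=params, headers=headers, timeout=30)
response.raise_for_status()
data = response.json()

# Process each page's content (legacy layout: pages keyed by ID, text under "*")
for page_id, page_info in data["query"]["pages"].items():
    if "revisions" not in page_info:  # e.g. the title does not exist
        continue
    content = page_info["revisions"][0]["*"]
    print(content)
```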
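For long title lists, two details matter: the titles parameter accepts at most 50 titles per request for ordinary clients, and a large result can be split across several responses, each carrying a "continue" object whose values must be sent back with the next request. The sketch below handles both. It assumes the formatversion=2 layout with rvslots=main, the transport function is a parameter so it can be swapped out (for example, in tests), and all helper names and the User-Agent are illustrative placeholders.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Callable, Dict, Iterator, List

API_URL = "https://en.wikipedia.org/w/api.php"  # assumption: English Wikipedia
USER_AGENT = "ExampleWikiScraper/0.1 (you@example.org)"  # placeholder; identify your client
MAX_TITLES = 50  # per-request title limit for ordinary (non-bot) clients

JsonGetter = Callable[[Dict[str, str]], Dict[str, Any]]


def http_get_json(params: Dict[str, str]) -> Dict[str, Any]:
    """Default transport: one GET request to the Action API, decoded as JSON."""
    url = API_URL + "?" + urllib.parse.urlencode(params)
    req = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def chunks(items: List[str], size: int = MAX_TITLES) -> Iterator[List[str]]:
    """Split a long title list into request-sized batches."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def query_pages(titles: List[str], get_json: JsonGetter = http_get_json) -> Iterator[Dict[str, Any]]:
    """Yield page objects for many titles, batching titles and following 'continue'."""
    for batch in chunks(titles):
        params = {
            "action": "query", "titles": "|".join(batch),
            "prop": "revisions", "rvprop": "content", "rvslots": "main",
            "format": "json", "formatversion": "2",
        }
        while True:
            data = get_json(params)
            for page in data.get("query", {}).get("pages", []):
                # With continuation, a page may first appear without its revisions; wait for them.
                if "revisions" in page or page.get("missing") or page.get("invalid"):
                    yield page
            if "continue" not in data:
                break
            # Merge the continuation values into the original parameters and ask again.
            params = {**params, **data["continue"]}
```

For example, iterating over query_pages(["Python (programming language)", "JavaScript"]) yields each page once its revision content has arrived, however many responses that takes.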

5. Dealing with Rate Limiting

Wikimedia asks API clients to keep their request rate reasonable to prevent excessive load on its servers. If you make too many requests in a short amount of time, your requests may be throttled or temporarily blocked; a throttled response can carry an HTTP 429 status and a Retry-After header saying how long to wait. To stay within the guidelines, send requests one at a time rather than in parallel, and include a descriptive User-Agent header that identifies your tool and how to contact you (Wikimedia's User-Agent policy asks for this, and requests without one may be blocked). If you're working on a larger project, add automatic retries and time delays between requests.
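Here is a minimal sketch of the retries-and-delays approach, using only the standard library. Its assumptions: it retries on HTTP 429 and 503 and on the API's maxlag error, it honours a numeric Retry-After header when present and otherwise backs off exponentially, and it sends maxlag=5 (a value commonly used by bots) so the API refuses the request while its database replicas are lagging. The endpoint, User-Agent and helper names are placeholders.

```python
import json
import time
import urllib.error
import urllib.parse
import urllib.request
from typing import Any, Dict, Optional

API_URL = "https://en.wikipedia.org/w/api.php"  # assumption: English Wikipedia
USER_AGENT = "ExampleWikiScraper/0.1 (you@example.org)"  # placeholder; identify your client


def retry_delay(attempt: int, retry_after: Optional[str], base: float = 1.0, cap: float = 60.0) -> float:
    """Seconds to wait: honour a numeric Retry-After if given, else exponential backoff."""
    if retry_after is not None:
        try:
            return min(float(retry_after), cap)
        except ValueError:
            pass  # Retry-After can also be an HTTP date; fall back to backoff
    return min(base * 2 ** attempt, cap)


def api_get(params: Dict[str, Any], max_retries: int = 5) -> Dict[str, Any]:
    """Illustrative helper: GET the Action API, retrying on 429/503 and 'maxlag' errors."""
    query = dict(params, format="json", maxlag="5")
    url = API_URL + "?" + urllib.parse.urlencode(query)
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    for attempt in range(max_retries + 1):
        retry_after = None
        try:
            with urllib.request.urlopen(request, timeout=30) as resp:
                data = json.load(resp)
                retry_after = resp.headers.get("Retry-After")
        except urllib.error.HTTPError as err:
            if err.code not in (429, 503):
                raise  # other HTTP errors are not worth retrying
            retry_after = err.headers.get("Retry-After")
        else:
            if data.get("error", {}).get("code") != "maxlag":
                return data
        if attempt < max_retries:
            time.sleep(retry_delay(attempt, retry_after))
    raise RuntimeError(f"giving up after {max_retries + 1} attempts")
```

In a larger job you would call api_get once per request in a plain loop, with a short time.sleep between iterations, rather than issuing requests in parallel.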

Why Use the Wikipedia API?

The Wikipedia API is ideal for retrieving up-to-date, structured content directly from Wikipedia, without the need to scrape raw HTML. This method is especially useful for applications that require regular updates, such as news aggregators, research projects, or machine learning models.

Structured Data: The API returns data in JSON or XML formats, which makes it easy to process and analyze.

Regular Updates: The API provides live data, so you can access the most recent content and revisions without waiting for bulk data dumps.

Ease of Use: With just a few lines of code, you can retrieve specific content from any page on Wikipedia.

Customization: The API allows you to customize your requests to include different types of data, such as revision history, categories, and metadata.

Using the Wikipedia API to scrape data is a great solution if you need structured, regularly updated content. By sending simple HTTP requests, you can retrieve data on specific articles, their revision histories, and other metadata in formats that are easy to process. Whether you’re building a research project, a data analysis pipeline, or a content aggregator, the Wikipedia API is a powerful tool that can help you access the wealth of knowledge stored on Wikipedia.


Web Scraping

If you prefer not to rely on Wikipedia Dumps or the API, another option is to scrape the data directly from the Wikipedia website using web scraping techniques. You can use libraries like BeautifulSoup or Scrapy in Python to parse HTML pages and extract text, images, and other elements.

Basic Steps:

Choose the page you want to scrape: Decide on the specific Wikipedia page you need data from.

Send a Request: Use Python’s requests library to send a request to the page and retrieve the HTML content.

Parse the HTML: Use tools like BeautifulSoup to parse the HTML structure and extract the needed information.

Store the Data: Save the extracted data to a database or file for later use.

This method lets you extract data from any page, but you need to be mindful of Wikipedia’s terms of use and avoid overloading their servers.
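The request and parsing steps above can be sketched as follows. The article mentions BeautifulSoup; this version uses the standard library's html.parser instead so it runs without extra packages. It only collects paragraph text (p elements) and skips sup elements so citation markers such as [1] are dropped, which also drops any genuine superscripts. The URL pattern assumes English Wikipedia, and the User-Agent and function names are illustrative.

```python
import urllib.parse
import urllib.request
from html.parser import HTMLParser
from typing import List

USER_AGENT = "ExampleWikiScraper/0.1 (you@example.org)"  # placeholder; identify your client


class ParagraphExtractor(HTMLParser):
    """Illustrative parser: collect the text of each <p>, skipping <sup> citation markers."""

    def __init__(self) -> None:
        super().__init__()
        self.paragraphs: List[str] = []
        self._buffer: List[str] = []
        self._in_paragraph = False
        self._skip_depth = 0  # > 0 while inside <sup> or <style> within a paragraph

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self._in_paragraph = True
            self._buffer = []
        elif tag in ("sup", "style") and self._in_paragraph:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("sup", "style") and self._skip_depth:
            self._skip_depth -= 1
        elif tag == "p" and self._in_paragraph:
            text = "".join(self._buffer).strip()
            if text:  # ignore empty spacer paragraphs
                self.paragraphs.append(text)
            self._in_paragraph = False

    def handle_data(self, data):
        if self._in_paragraph and not self._skip_depth:
            self._buffer.append(data)


def fetch_article_html(title: str) -> str:
    """Download the rendered article page for a title (assumes English Wikipedia)."""
    url = "https://en.wikipedia.org/wiki/" + urllib.parse.quote(title.replace(" ", "_"))
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8")


def article_paragraphs(html: str) -> List[str]:
    """Parse article HTML and return its non-empty paragraphs."""
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    return parser.paragraphs
```

For example, article_paragraphs(fetch_article_html("Python (programming language)")) returns the article's paragraphs as a list of strings, ready to be stored in a file or database.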

Using Existing Wikipedia Scraper Tools

If you don’t want to write code from scratch, you can use existing Wikipedia scraper tools. These tools typically provide simple interfaces that allow you to quickly scrape Wikipedia content and import it into a database.

Common tools include:

WikiScraper: A simple-to-use tool that supports scraping Wikipedia pages.

Pywikibot: A Python library that helps interact with Wikipedia, including scraping data and editing pages.

Piaproxy: A proxy service that automatically switches IP addresses to avoid being blocked, supports IPs from multiple regions for accurate regional data, and offers unlimited traffic, which suits long-running tasks.

Conclusion

Scraping Wikipedia data can provide a wealth of content for various projects, whether for academic research, application development, or data analysis. Depending on your needs, you can choose methods like using Wikipedia Dumps, the API, or direct web scraping. Whichever method you use, ensure you follow Wikipedia’s terms of service and respect their servers.